Google’s sixth-generation TPUs, Trillium, are now generally available through Google Cloud Platform. Trillium was designed in response to the growing demands of generative AI, to give businesses and developers the compute power they need to advance AI development.

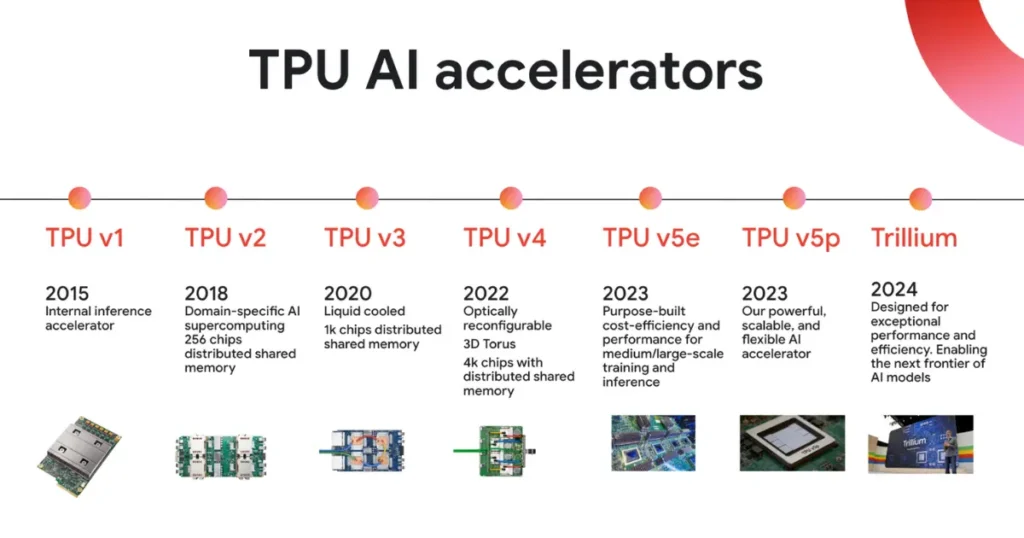

Google’s Path to Cloud TPUs

Google began developing Tensor Processing Units, or TPUs, over a decade ago to meet the growing demands of AI workloads. These custom AI accelerators have since become the backbone of AI development at Google, and each new generation has improved on the performance and efficiency of the last. Although TPUs were initially built to support Google’s own machine learning work, they are now available to third parties through Google Cloud Platform.

Unmatched Performance and Efficiency

Trillium is purpose-built to run the matrix- and vector-based maths required by AI workloads while optimizing both performance and cost. The newest TPUs outperform previous generations in several areas:

- 4x improvement in training performance

- Up to 3x increase in inference throughput

- 67% increase in energy efficiency

- 4.7x increase in peak compute performance per chip

- 2.5x improvement in training performance per dollar

Early customers such as AI21 Labs have praised Trillium’s scalability, efficiency and power. “[They] will be essential in accelerating the development of our next generation of sophisticated language models,” said Barak Lenz, CTO of AI21 Labs.

Powering Diverse AI Workloads

Trillium is optimized for the challenges developers face as AI models grow in scale, including:

- Scaling AI training workloads

- Training LLMs including dense and Mixture of Experts (MoE) models

- Embedding-intensive models

- Better price-performance for both training and inference

- Inference performance and collection scheduling

Success with Gemini 2.0

Trillium played a central role in the launch of Gemini 2.0, Google’s most advanced and capable model yet: Google used Trillium TPUs to train it. “TPUs powered 100% of Gemini 2.0 training and inference,” said Sundar Pichai, Google’s CEO. Trillium’s improvements over its predecessors underline the momentum behind Google’s efforts to future-proof its AI infrastructure.

Industry Adoption of Trillium TPUs

Trillium’s advanced capabilities are expected to be instrumental in building and training next-generation AI models and agents. Early adopters plan to use the TPUs to drive their own AI advancements:

- Essential AI: Reinvent business operations with AI

- Nuro: Enhance everyday life through robotics

- Deep Genomics: Power the future of drug discovery supported by AI

What’s next for Trillium?

Trillium TPUs are designed for scalability and high performance, so they can keep pace with the growing demands of diverse AI workloads. Available through Google Cloud Platform, Google’s sixth-generation TPUs are set to play a crucial role in advancing AI innovation across many applications. With this general availability launch, Google reaffirms its commitment to providing the industry with cutting-edge, future-proof technology.

Further Reading

Read more from Google’s launch announcement: Trillium TPUs are now GA

An overview of Google’s new AI model for the agentic era: Introducing Gemini 2.0